What & Why?

This post is about a SAN performance boost that is widely overlooked. I have seen it implemented in very few setups, and yet it is a neat and simple approach to get additional performance out of your existing SAN and storage.

Now you are probably thinking: “That intro really sounds like a sales pitch” and “Why the f..k does the idiot talk about both SAN and storage, that is just two words for the same thing” – keep reading, I bet this will change your mind.

The performance boost I’m talking about here is all about configuring buffer credits on your HBAs, SAN switches, ISLs and the ports on your storage system.

There is a ton of information and detail about buffer credits, how they are monitored and how they work behind the scenes, but I will keep this post simple and avoid diving too deep into the details.

Misunderstandings

First of all, let’s clear up that general misunderstanding about SAN and storage system: they are not the same. Your storage system is the storage system itself (as the word says), while the SAN is the network between your servers and your storage system. SAN stands for Storage Area Network; think of it like your LAN (Local Area Network), but for your storage system. Please help me clear up that misunderstanding, because I hear it way too often.

While we are at misunderstandings, let’s get another one sorted out. The SAN environment typically uses fiber optic cables and light for communication. I hear a lot of storage and SAN vendors describe their communication systems as the fastest on earth, since they communicate at the speed of light (which is 299,792,458 m/s in vacuum, i.e. at a refractive index of 1). Part of the statement is true, but light traveling in a fiber optic cable typically sees a refractive index between 1.46 and 1.47, depending on the vendor and quality. Since the speed in a medium is the vacuum speed divided by the refractive index, light in a fiber optic cable typically travels at between 203,940,448 m/s and 205,337,300 m/s (vendor, quality, material and wavelength can all affect the refractive index). In comparison, the refractive index of air is 1.000293 at 0 °C, which means that wireless communication (e.g. radio waves) travels at 299,704,645 m/s (temperature and humidity can both affect the refractive index). This means that a wireless signal itself travels faster than a signal in a fiber optic cable, so your private Wi-Fi at home is sending signals at a higher speed than the fiber optic network in your enterprise SAN.

There are a ton of other reasons why you typically still see more throughput and lower latency in a fiber optic network, but the important message here is that the signal itself travels faster in air than in a fiber optic cable.

This knowledge might not be relevant for your business, but if your business depends on low latency (e.g. the trading industry), this type of information will have an impact, and you should therefore care about these details.
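The speeds quoted above all come from dividing the vacuum speed of light by the refractive index. Here is a minimal Python sketch of that arithmetic, in case you want to plug in your own values. The function names and the 10 km example distance are my own assumptions, purely for illustration.

```python
# Speed of light in vacuum in m/s (exact by definition of the metre).
C_VACUUM_M_PER_S = 299_792_458


def propagation_speed(refractive_index: float) -> float:
    """Return the signal speed in m/s in a medium with the given refractive index."""
    if refractive_index < 1:
        raise ValueError("refractive index is expected to be >= 1")
    return C_VACUUM_M_PER_S / refractive_index


def one_way_delay_us(distance_m: float, refractive_index: float) -> float:
    """Return the one-way propagation delay in microseconds over distance_m."""
    return distance_m / propagation_speed(refractive_index) * 1_000_000


if __name__ == "__main__":
    media = [
        ("vacuum", 1.0),
        ("air at 0 °C", 1.000293),
        ("fiber, n=1.46", 1.46),
        ("fiber, n=1.47", 1.47),
    ]
    for name, n in media:
        # 10 km is a hypothetical link length, chosen only to make the delay visible.
        speed = propagation_speed(n)
        delay = one_way_delay_us(10_000, n)
        print(f"{name:>14}: {speed:>13,.0f} m/s, {delay:6.2f} us per 10 km")
```

This only covers the propagation delay of the signal itself. Everything else on the path (serialization, switching, protocol handling) comes on top of it.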

Sidenote: You end up with a lower refractive index in your optical fibers if they are produced in microgravity. This is why NASA has been experimenting with producing fiber cables in space onboard the ISS (International Space Station).

Read more here: https://www.nasa.gov/humans-in-space/optical-fiber-production/

And here (Flawless Space Fibers-1): https://www.nasa.gov/mission/station/research-explorer/investigation/?#id=8850

FOMS (Fiber Optic Manufacturing in Space) is actually producing SpaceFiber today: https://fomsinc.com/

Ahhhhhhhhhh, it is nice to get these general misunderstandings off my chest. Let’s continue to the main point of this post: buffer credits.

The concept

The concept and mindset I want to change here is similar to the early days when private households moved from DSL internet lines to fiber optic internet lines. At that time many people thought there would be a bottleneck at all providers of internet services (e.g. streaming services, download sites), but in reality it turned out that these providers saw a much better distribution of their load: people got their content way faster and therefore only pulled resources from them for a shorter period, leaving room for other customers to consume resources.

It is like a military special force: fast in, do your stuff, fast out.

This old way of thinking (DSL internet line) is still how many storage admins configure their SAN and storage systems today. I get why: they want to protect their beloved storage system, so no single host can pull out all resources at once.

I have been a victim of the same thinking myself, but I promise you, you should try out the other approach: open up your SAN and storage systems so all hosts can access their LUNs at full speed, thereby getting off the storage system fast and leaving room for other hosts to access their LUNs, also at full speed.

Generally speaking, you want to push your potential bottlenecks all the way to your storage system, since it is designed to handle this type of load and prioritization.

Server vs. Storage

I have too often heard discussions between server and storage engineers, where the server people were complaining about poor storage throughput or high disk latency, while the storage people said they didn’t see any performance issues on their storage system – and both were absolutely right.

That is because the server was throttled by buffer credits, either on the server HBAs, in the SAN or on the storage system HBAs. This is also why everything looks fine and dandy from a storage system perspective: the IOs are throttled before they reach the storage system, so it looks like the storage system can easily keep up and has nothing to do – just chilling, while the server has been asked to back off.

This is where the troubleshooting normally stops. The server people typically start complaining that the expensive storage system is still not capable of delivering what their servers ask for, and the storage people typically start complaining that the servers are not capable of saturating their nice and expensive storage system.

This is where buffer credits come to rescue both the server and storage engineers.
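To put a number on how a host can be throttled while the storage system sits idle, here is a rough sketch based on Little's Law: the number of IOs you can complete per second is capped by how many you are allowed to have outstanding, divided by how long each one takes to come back. The function names and the example figures (0.5 ms round trip, 8 KB blocks) are hypothetical and only meant to illustrate the effect, not to describe any specific HBA or array.

```python
def max_iops(outstanding_ios: int, round_trip_latency_s: float) -> float:
    """Upper bound on IOs per second for a given number of outstanding IOs.

    Little's Law: throughput = items in flight / time each item spends in flight.
    """
    if outstanding_ios < 1:
        raise ValueError("at least one outstanding IO is required")
    if round_trip_latency_s <= 0:
        raise ValueError("latency must be positive")
    return outstanding_ios / round_trip_latency_s


def max_throughput_mb_s(outstanding_ios: int, round_trip_latency_s: float,
                        block_size_bytes: int) -> float:
    """Upper bound on throughput in MB/s (1 MB = 1,000,000 bytes)."""
    return max_iops(outstanding_ios, round_trip_latency_s) * block_size_bytes / 1_000_000


if __name__ == "__main__":
    latency_s = 0.0005   # hypothetical 0.5 ms round trip per IO
    block_size = 8_192   # hypothetical 8 KB blocks
    for limit in (8, 32, 64, 256):
        iops = max_iops(limit, latency_s)
        mb_s = max_throughput_mb_s(limit, latency_s, block_size)
        print(f"limit {limit:>3}: at most {iops:>9,.0f} IOPS, {mb_s:>8,.1f} MB/s")
```

With a low limit the host hits its ceiling long before the storage system breaks a sweat, which is exactly the situation where both teams are right.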

Buffer credits

In a simplified way, you can think of buffer credits as how many data packets of light you can have flying on the cable without having heard anything back from them (outstanding IOs).

If you are transmitting large data packages (e.g. 64k blocks or bigger) between your host and storage system, then you don’t need that many buffer credits. If you are transmitting smaller data packages (e.g. 4k blocks or smaller) between your host and storage system, then you need more buffer credits in order not to get throttled on your way to the storage system. In reality, your typical data load is a mixture of all sizes of packages.
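Here is a simplified sketch of why smaller packages need more credits. The sender spends one credit per frame and only gets it back when the acknowledgement returns, so to keep the cable busy you need roughly as many credits as the number of frames that fit into one round trip. The model ignores processing time in the switches and the receiver, and the function name, the refractive index of 1.468 and the example numbers (10 km link, 800 MB/s) are my own assumptions, so treat the result as a rough estimate rather than a sizing rule.

```python
import math

C_VACUUM_M_PER_S = 299_792_458   # speed of light in vacuum, m/s
FIBER_REFRACTIVE_INDEX = 1.468   # assumption: inside the 1.46 to 1.47 range


def credits_to_fill_link(distance_m: float, throughput_bytes_per_s: float,
                         frame_bytes: int) -> int:
    """Estimate the credits needed to keep a link busy (simplified model).

    credits ~= round-trip time / time it takes to put one frame on the cable
    """
    speed_m_per_s = C_VACUUM_M_PER_S / FIBER_REFRACTIVE_INDEX
    round_trip_s = 2 * distance_m / speed_m_per_s
    frame_time_s = frame_bytes / throughput_bytes_per_s
    # Even on a very short cable you need at least one credit to send anything.
    return max(1, math.ceil(round_trip_s / frame_time_s))


if __name__ == "__main__":
    distance_m = 10_000          # hypothetical 10 km link
    throughput = 800_000_000     # hypothetical 800 MB/s link
    for frame_bytes in (2148, 1024, 512):
        needed = credits_to_fill_link(distance_m, throughput, frame_bytes)
        print(f"{frame_bytes:>5} byte frames: about {needed} credits")
```

The same function also shows the other two levers: a longer cable or a faster link both increase the number of credits you need.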

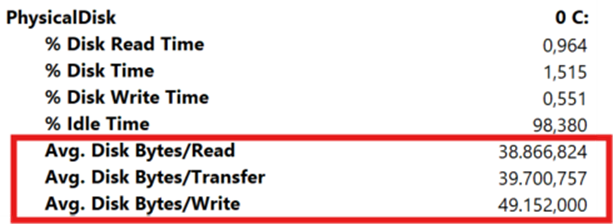

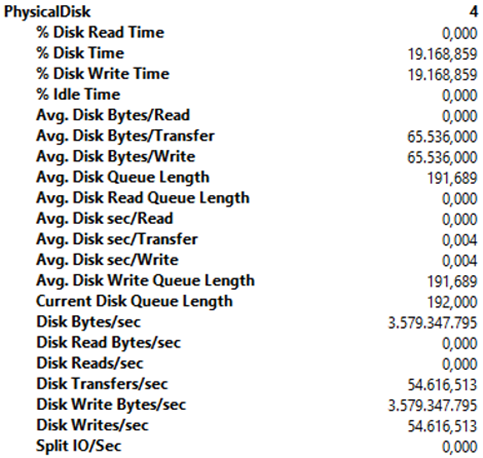

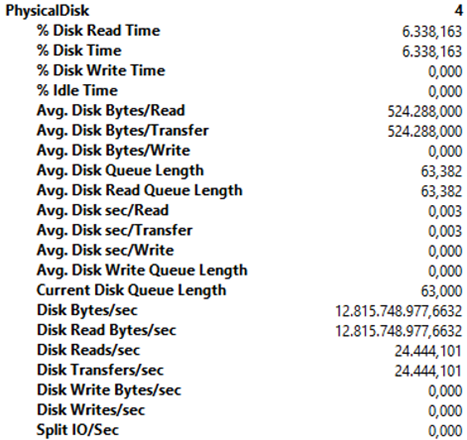

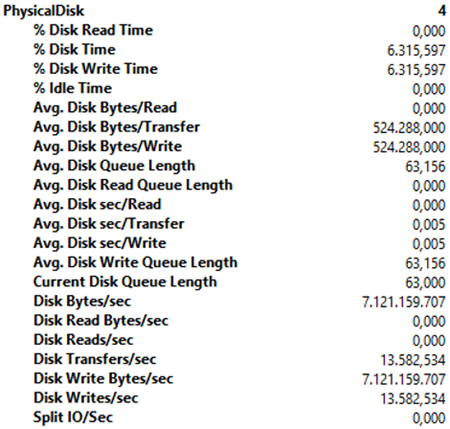

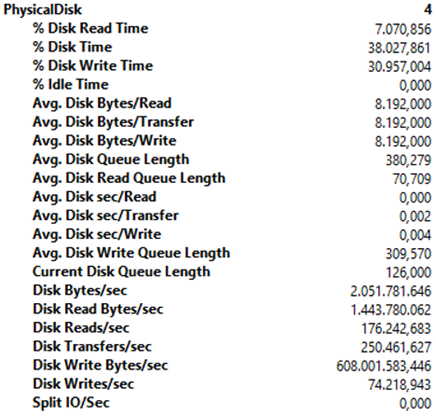

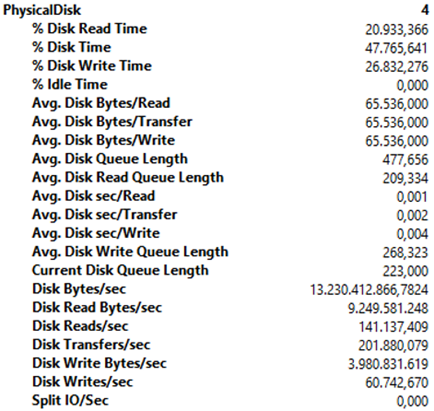

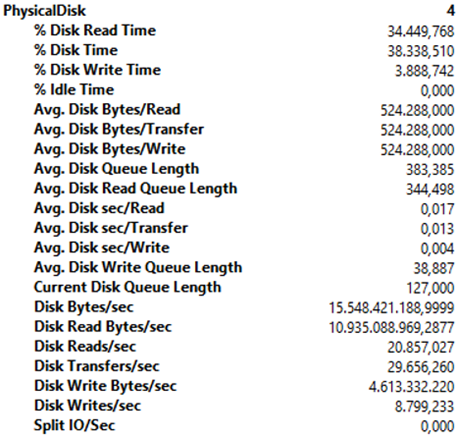

In Performance Monitor (perfmon.exe), under the PhysicalDisk counter, you can watch your avg. package size to your disk. Try to watch these counters while your server is running its normal load; you will see the numbers jumping up and down, because the data packages typically come in all sizes (depending on your application workload, of course).

(This screenshot is from a C drive; you will have to select the disk that is attached from your storage system.)
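If you export the counters instead of watching them live, the average package size per sample is simply the bytes per second divided by the transfers per second. This is a small sketch of that calculation; it assumes you have collected pairs of the PhysicalDisk counters "Disk Bytes/sec" and "Disk Transfers/sec", and the function name and sample values are made up for illustration.

```python
def average_io_sizes(samples: list[tuple[float, float]]) -> list[float]:
    """Return the average IO size in bytes for each (bytes/sec, transfers/sec) sample.

    Samples with no transfers are skipped, since an idle disk has no IO size.
    """
    sizes = []
    for bytes_per_sec, transfers_per_sec in samples:
        if transfers_per_sec > 0:
            sizes.append(bytes_per_sec / transfers_per_sec)
    return sizes


if __name__ == "__main__":
    # Hypothetical samples: (Disk Bytes/sec, Disk Transfers/sec)
    samples = [(8_192_000, 1_000), (0, 0), (65_536_000, 1_000), (12_288_000, 2_000)]
    for size in average_io_sizes(samples):
        print(f"avg. IO size: {size / 1024:.1f} KB")
```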

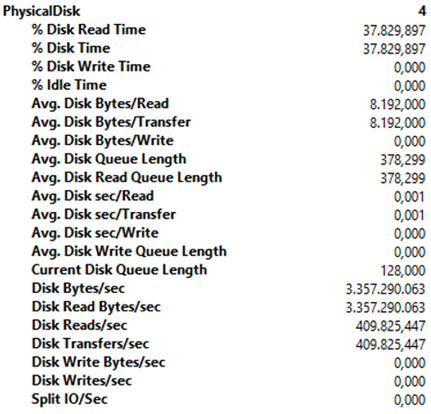

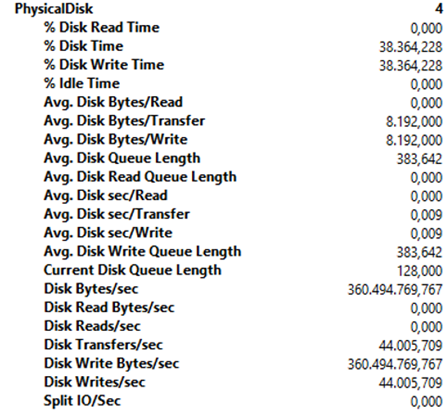

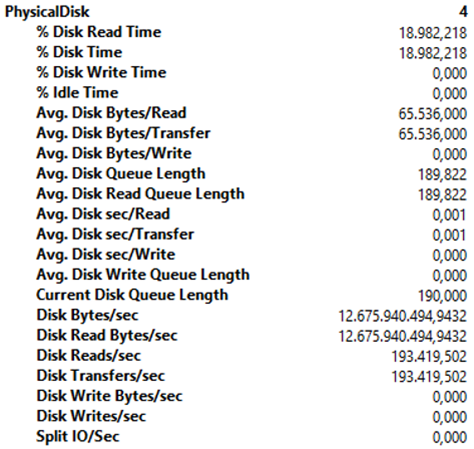

Here I will post a few screenshots of storage system disks that all have a predefined load running. My intention is that you can familiarize yourself with what the PhysicalDisk performance counters can look like.

8K, 100% read

8K, 100% write

64K, 100% read

64K, 100% write

512K, 100% read

512K, 100% write

8K, 70% read, 30% write

64K, 70% read, 30% write

512K, 70% read, 30% write

Windows

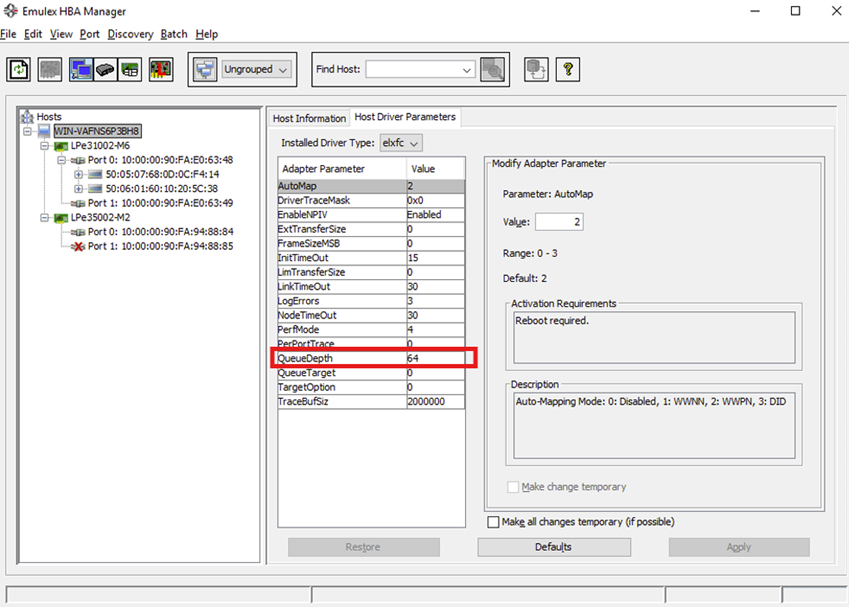

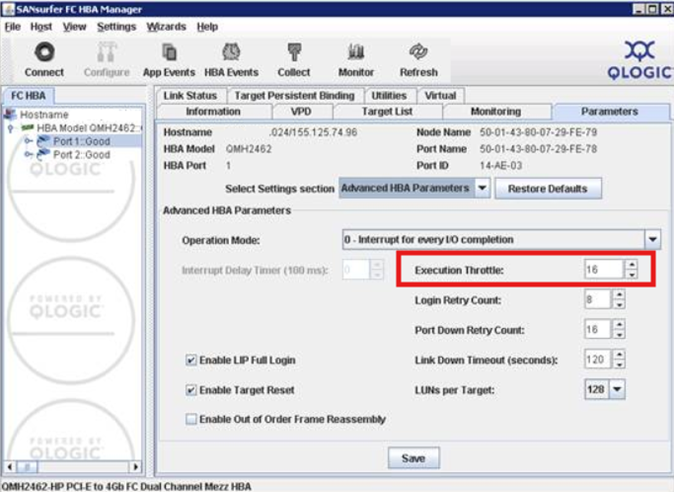

The configuration of buffer credits on HBAs for Windows servers differs from vendor to vendor. Emulex calls it queue depth, QLogic/Marvell calls it execution throttle. The maximum allowed value differs between versions.

I don’t care about the version or vendor, I always set it to the maximum allowed value.

This is a screenshot from an old Emulex HBA Manager. This is not the max value, just an example.

This is a screenshot from an old QLogic SANSurfer. This is not the max value, just an example.

VMware

On VMware you can use these commands to get and set the queue depth.

Verify which HBA module you have

For QLogic: # esxcli system module list | grep qln

For Emulex: # esxcli system module list | grep lpfc

For Brocade: # esxcli system module list | grep bfa

Set the values (whatever the max allowed value is, here I’m using 256)

For QLogic: # esxcli system module parameters set -p ql2xmaxqdepth=256 -m qlnativefc

For Emulex: # esxcli system module parameters set -p lpfc0_lun_queue_depth=256 -m lpfc

For Brocade: # esxcli system module parameters set -p bfa_lun_queue_depth=256 -m bfa

Reboot your host and verify the values with this command

# esxcli system module parameters list -m driver

On VMware it is also important to set Disk.SchedNumReqOutstanding to the maximum allowed value for all LUNs, to ensure you are not introducing a new bottleneck before the IOs reach the storage system.

Get the current value with this command

# esxcli storage core device list -d naa.xxx

Set the wanted value with this command (here I set it to 256)

# esxcli storage core device set -d naa.xxx -O 256

Brocade/Broadcom SAN switches

On Brocade/Broadcom SAN switches, you can change the buffer credits assigned to a given port with this command. I typically set it to 64 for all ports in the switch.

(I repeat this command for all ports on the switch. This command assigns 64 buffer credits to port 7)

portcfgfportbuffers --enable 7 64

This command shows the buffer usage.

portbuffershow

For ISLs (E_ports) I might set the configured length longer than it actually is, in order to automatically get more buffer credits assigned to the ISLs.

Otherwise, use this command to change the buffer credits for an E_port

portcfgeportcredits

You could also play around with the ISL R_RDY property, depending on your ISL configuration. This is the command to use.

portCfgISLMode

Your storage system

Typically, the buffer credits on your storage system HBAs are already set fairly high. But please check your storage vendor’s documentation to see how they are set and whether you can increase the value.

These are all settings that your average storage vendor or storage consultant would not play around with (probably because they are not aware of them), but if you are looking to get the best performance out of your storage system, I highly recommend making these changes in your setup.